The national security state is the main driver of censorship and election interference in the United States. “What I’m describing is military rule,” says Mike Benz. “It’s the inversion of democracy.”

Tucker Carlson Interviews Mike Benz.

This blew Tucker away… It might blow you away too.

- The US government is giving millions to university labs and private firms to suppress the opinions of US citizens on social media.

- The National Science Foundation is taking a program set up to solve “grand challenges” like quantum technology and using it for the science of censorship.

- Government-funded projects are sorting massive databases of American political and social communities into categories like “misinformation tweeters” and “misinformation followers.”

The National Science Foundation’s “Convergence Accelerator Track F” Is Funding Domestic Censorship Superweapons

Article from Jan. 2023

Before we describe the “Science of Censorship” federal grant program that is the focus of this report, perhaps the most disturbing introduction is to watch how grantees of your tax dollars describe themselves.

In the promo video below, National Science Foundation (NSF) grant project WiseDex explains how the federal government is funding it to provide social media platforms with “fast, comprehensive and consistent” censorship solutions. WiseDex builds sprawling databases of banned keywords and factual claims to sell to companies like Facebook, YouTube, and Twitter. It then integrates these banned-claims databases into censorship algorithms, so that “harmful misinformation stops reaching big audiences.”

Transcript:

Posts that go viral on social media can reach millions of people. Unfortunately, some posts are misleading. Social media platforms have policies about harmful misinformation. For example, Twitter has a policy against posts that say authorized COVID vaccines will make you sick.

When something is mildly harmful, platforms attach warnings like this one that points readers to better information. Really bad things, they remove. But before they can enforce, platforms have to identify the bad stuff, and they miss some of it. Actually, they miss a lot, especially when the posts aren’t in English. To understand why, let’s consider how platforms usually identify bad posts. There are too many posts for a platform to review everything, so first a platform flags a small fraction for review. Next, human reviewers act as judges, determining which flagged posts violate policy guidelines. If the policies are too abstract, both steps, flagging and judging, can be difficult.

WiseDex helps by translating abstract policy guidelines into specific claims that are more actionable; for example, the misleading claim that the COVID-19 vaccine suppresses a person’s immune response. Each claim includes keywords associated with the claim in multiple languages. For example, a Twitter search for “negative efficacy” yields tweets that promote the misleading claim. A search on “eficacia negativa” yields Spanish tweets promoting that same claim. The trust and safety team at a platform can use those keywords to automatically flag matching posts for human review. WiseDex harnesses the wisdom of crowds as well as AI techniques to select keywords for each claim and provide other information in the claim profile.

For human reviewers, a WiseDex browser plug-in identifies misinformation claims that might match the post. The reviewer then decides which matches are correct, a much easier task than deciding if posts violate abstract policies. Reviewer efficiency goes up and so does the consistency of their judgments. The WiseDex claim index will be a valuable shared resource for the industry. Multiple trust and safety vendors can use it as a basis for value-added services. Public interest groups can also use it to audit platforms and hold them accountable. WiseDex enables fast, comprehensive, and consistent enforcement around the world, so that harmful misinformation stops reaching big audiences.

As WiseDex’s promo and website illustrate, it takes speech violations under tech companies’ Terms of Service as a starting point (such as prohibitions on claims that Covid-19 vaccines are ineffective) and then constructs long strings of keywords, tags, and “matching posts” to be automatically flagged by tech platforms’ algorithms.

WiseDex’s censorship product offering includes a dashboard that gives censors at tech platforms a percentage confidence level for each tweet or post it assesses against the policy on claims that Covid vaccines are ineffective. In one image of the dashboard, for example, its AI tags a user’s posts as 80% likely to violate a policy against claims that Covid vaccines have negative efficacy. The dashboard then lets censors remove such posts or attach a warning label to them.
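The flag-for-review step described in WiseDex’s promo, where each claim carries keywords in several languages and matching posts are queued for human reviewers, can be illustrated with a short sketch. It is a simplification under stated assumptions, not WiseDex’s code: it assumes plain case-insensitive substring matching, the names `ClaimProfile` and `flag_posts` are hypothetical, and it does not attempt to reproduce the AI confidence scores the dashboard displays.

```python
from dataclasses import dataclass, field


@dataclass
class ClaimProfile:
    """Hypothetical stand-in for a WiseDex-style claim profile."""
    claim_id: str
    description: str
    # Keywords associated with the claim, possibly in several languages.
    keywords: list[str] = field(default_factory=list)


def flag_posts(posts: list[str], claims: list[ClaimProfile]) -> list[tuple[int, str]]:
    """Return (post_index, claim_id) pairs for posts containing any claim keyword.

    Assumption: simple case-insensitive substring matching. Flagged pairs
    would go to a human review queue; nothing is removed here.
    """
    flags = []
    for i, post in enumerate(posts):
        text = post.casefold()
        for claim in claims:
            if any(kw.casefold() in text for kw in claim.keywords):
                flags.append((i, claim.claim_id))
    return flags


# Hypothetical example data modeled on the promo's "negative efficacy" claim.
claims = [ClaimProfile("vax-neg-eff", "vaccine has negative efficacy",
                       ["negative efficacy", "eficacia negativa"])]
posts = ["New study shows NEGATIVE EFFICACY!",
         "Nice weather today",
         "La eficacia negativa es real"]

print(flag_posts(posts, claims))  # [(0, 'vax-neg-eff'), (2, 'vax-neg-eff')]
```

In this sketch nothing is deleted automatically; matches only enter a review queue, mirroring the two-step flag-then-judge process the transcript describes.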

So WiseDex works by building sprawling databases of forbidden claims that match Terms of Service violations for social and political topics like Covid-19 and US elections. And the Biden Administration, via the NSF, is providing start-up capital for tech tools to get social media platforms to censor more aggressively.

In previous reports, FFO has covered the whole-of-society censorship model used to fund, launder and coordinate censorship activities between the federal government and outside partners.

In the case of WiseDex, the censorship laundering works through NSF giving $750,000 in taxpayer-funded grants (plus another $5 million potentially available in the future) to a disinformation lab at the University of Michigan, which in turn creates a censorship technology product for private-sector social media platforms.

So with NSF censorship grantees like WiseDex, the end users of the government-funded tech product are the social media platforms that actually delete the flagged posts. With other NSF censorship grantees, like the Orwellian-named project “Course Correct,” the end users of the government-funded censorship tools are politically like-minded journalists and fact-checkers who flag posts to social media platforms for deletion or demotion. There, the censorship laundering runs from NSF to the grantee, from the grantee to journalists and fact-checkers, and from them to the platforms.

In the video below, Course Correct explains its “Precision Guidance Against Misinformation” censorship offering:

Transcript:

There are good journalists and bad journalists, and some of us make mistakes. That happens. So, that’s a credibility issue. But when we have that already, mistrust of the public about what we do, when you have them going to alternative sources that are misleading them, sometimes on purpose, it is not good for us, in general, because we’re getting bad information.

Without a common set of facts to move from, it’s very difficult for us to solve the biggest problems that we have as a society. Course Correct is trying to nudge us into the direction of understanding and agreeing upon the verifiable truth for the foundational issues that we need to sort through as a society in order to solve the big problems that are currently vexing us.

We are building the core machine learning data science and artificial intelligence technology to identify misinformation, using logistics, network science, and temporal behavior, so that we can very accurately identify what misinformation, where misinformation is spreading, who is consuming the misinformation, and what is the reach of the misinformation.

The misinformation is coming from a separate part of the country or, you know, it is people with a certain perspective, a political view, who are sharing a certain misinformation. You want to be able to tailor the correction based on that because otherwise there’s a lot of research that says that actually corrections don’t help if you’re not able to adjust or tailor it to the person’s context.

And Course Correct has pioneered experimental evidence showing that the strategic placement of corrective information in social media networks can reduce misinformation flow. So, the experiments we are running are able to help us understand which interventions will work. And so by testing these different strategies at the same time, Course Correct can tell journalists the most effective ways to correct misinformation in the actual networks where the misinformation is doing the most damage.

So here, the federal government is paying a civil society group to build a “digital dashboard” to “help journalists detect misinformation, correct misinformation, [and] share message interventions containing the verifiable truth into misinformation networks.”

This means the US government is directly and deliberately sponsoring the construction of massive databases of “misinformation tweeters, misinformation retweeters, misinformation followers and misinformation followees.”

But it’s more than just names of US citizens in a wrongthink database for ordinary opinions expressed online. The “dynamic dashboard” will also reveal relationship dynamics among these US citizens: which communities they are part of, whom they influence, and who influences them.

Currently, Course Correct primarily targets US citizens who are thought dissidents in two topic areas: vaccine hesitancy and electoral skepticism (i.e. Trump supporters who question mail-in ballots, early voting dropboxes, voting machine efficacy, or other election integrity issues).

In case the political bias at play here is not immediately obvious, Course Correct gives precise examples of the “political misinformation” its AI tools target in its video presentation, where it highlights “misinformation spreaders” in the persona of former President Donald Trump. During the 2020 election cycle, the demo video suggests, then-President Trump pursued an election integrity lawsuit in the state of Wisconsin that Course Correct designated as rooted in misinformation.


Per Course Correct’s NSF grant page:

This project, Course Correct—Precision Guidance Against Misinformation, is a flexible and dynamic digital dashboard that will help end users such as journalists to (1) identify trending misinformation networks on social media platforms like Twitter, Facebook, and TikTok, (2) strategically correct misinformation within the flow of where it is most prevalent online and (3) test the effectiveness of corrections in real time. In Phase II, Course Correct will partner with local, state, national, and international news and fact-checking organizations to test how well the Course Correct digital dashboard helps journalists detect misinformation, correct misinformation, share message interventions containing the verifiable truth into misinformation networks, and verifying the success of the corrections.

This project aims to (1) extend our use of computational means to detect misinformation, using multimodal signal detection of linguistic and visual features surrounding issues such as vaccine hesitancy and electoral skepticism, coupled with network analytic methods to pinpoint key misinformation diffusers and consumers; (2) continue developing A/B-tested correction strategies against misinformation, such as observational correction, using ad promotion infrastructure and randomized message delivery to optimize efficacy for countering misinformation; (3) disseminate and evaluate the effectiveness of evidence-based corrections using various scalable intervention techniques available through social media platforms by conducting small, randomized control trials within affected networks, focusing on diffusers, not producers of misinformation and whether our intervention system can reduce the misinformation uptake and sharing within their social media networks; and (4) scale Course Correct into local, national, and international newsrooms, guided by dozens of interviews and ongoing collaborations with journalists, as well as tech developers and software engineers. By the end of Phase II, Course Correct intends to have further developed the digital dashboard in ways that could ultimately be adopted by other end users such as public health organizations, election administration officials, and commercial outlets.

The Convergence Accelerator Track F Program

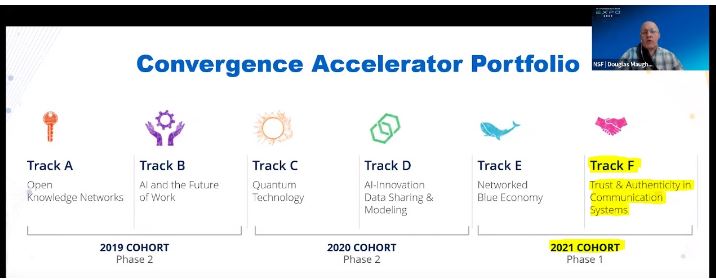

WiseDex and Course Correct are just two grantees in a cohort of roughly a dozen government-funded censorship technologies all being developed under the same program at NSF: the Convergence Accelerator “Track F” program.

NSF has an annual budget of roughly $10 billion. Its latest budget request, for FY2023, called for an 18% increase in appropriations from Congress. Since NSF’s founding in 1950, it has served as a major federal source of academic research funds, accounting for 25% of all government-supported basic research at US colleges and universities. In some fields of research, such as computer science, NSF accounts for 90% of university research grants.

NSF traditionally funds basic academic research, not venture-capital style commercial products and services to sell to the private sector. But NSF’s funding vision expanded with the advent of the Convergence Accelerator program.

The Convergence Accelerator program at NSF was created in 2019 under the Trump Administration “to address national-scale societal challenges, integrating multidisciplinary research and innovation processes to transition research and discovery toward impactful solutions.”

In other words, some areas of technology development require many different fields of scholarship and industry to converge on the same “grand challenge,” and the Convergence Accelerator program was conceived to bring that scientific talent together toward a common purpose.

For the Trump Administration, the Convergence Accelerator program was used to accelerate development of complex scientific research fields such as quantum technology. The “Tracks” of the Convergence Accelerator program in 2019 and 2020 reflect this hard, physical focus.

When the Biden Administration took over in 2021, they put the Convergence Accelerator program to use through a new “Track” called “Track F”, which would be wholly dedicated to funding the science of censorship.

Track F uses the tagline “Trust and Authenticity in Communication Systems.” But this is a euphemistic way of saying that if trust in government and mainstream institutions cannot be earned, it must be installed.

By science.

A thinner pretext for the creation of military-grade domestic censorship technologies could scarcely be provided. The NSF 2022 Portfolio Guide simply says Track F was created because “Although false claims… have existed throughout history, the problems that they cause have reached critical proportions.”

That’s it. Misinformation has hit critical proportions – that’s why your tax dollars are paying for censorship technologies at NSF.

Here’s a round-up of the portfolio teams that have received government funding from Track F. Bear in mind that each of these teams has received $750,000 in initial government funding for Phase I prototyping and is eligible for up to $5 million more for Phase II technical development:

- Analysis and Response Toolkit for Trust—Led by Hacks/Hackers, Analysis and Response Toolkit for Trust, or ARTT, assists online communities with building trust around controversial topics such as vaccine efficacy. Users receive helpful approaches to engage, navigate, and analyze information. The toolkit’s primary resource, ARTT Guide, provides expert-informed suggestions for analyzing information and communicating with others to build trust. [Promo Video]

- Co-Designing for Trust—Led by the University of Washington, Co-Designing for Trust builds community-oriented infrastructure that supports underserved communities to design, collaborate on, customize, and share digital literacy approaches. Developed by academic researchers, community organizations, libraries, journalists, and teachers, Co-Designing for Trust re-imagines literacy to provide the cognitive, social, and emotional skills necessary to respond to problematic information. [Promo Video]

- CO:CAST—During crises, decision-makers are challenged by information overload that is often exacerbated by misinformation. Led by The Ohio State University, CO:CAST is an AI-based system that helps decision-makers manage their information environment. CO:CAST personalizes, curates and separates credible from less credible information, presents it contextually within existing workflows, and leverages community-academic-government partnerships to mitigate misinformation impacts. [Promo Video]

- Co·Insights—Led by Meedan, Co·Insights enables community, fact-checking, and academic organizations to collaborate and respond effectively to emerging misinformation narratives that stoke social conflict and distrust. Our easy-to-use, mobile-friendly tools allow community members to report problematic content and discover resources while cutting-edge machine learning analyzes content across the web to create valuable insights for community leaders and fact-checkers. [Promo Video]

- CommuniTies—Today’s news outlets are not always trusted as information sources by their communities. Led by Temple University, CommuniTies is changing the dialogue around distrust of media. Using an AI network science tool, CommuniTies provides actionable insights for local newsrooms to help them build digital lines of communication with their communities, preventing the spread of misinformation and disinformation. [Promo Video]

- Course Correct—Led by University of Wisconsin – Madison, Course Correct is a dynamic misinformation identification dashboard to empower journalists to identify misinformation networks, correct misinformation within the affected networks, and test the effectiveness of corrections. Designed by mass communication, computer scientists, engineers, and social media experts, Course Correct rebuilds trust in civic institutions while helping journalists tame the misinformation tide. [Promo Video]

- Expert Voices Together—Led by George Washington University, Expert Voices Together, or EVT, is building a rapid-response system to assist journalists, scientists, and other experts whose work is being undermined by coordinated online harassment campaigns. Modeled on best practices in trauma-informed crisis intervention, the EVT platform provides a secure environment for experts to receive support from their professional communities. [Promo Video]

- Search Lit—Led by Massachusetts Institute of Technology, Search Lit is a suite of customizable, human-centered, open-source interventions that combats misinformation by building the public’s capacity at scale to sort truth from fiction online. The team brings expertise in large scale learning, ethnography, and digital search education, with partners in military, healthcare, and public libraries. [Promo Video]

- TrustFinder—People need tools to filter misinformation and find trustworthy signals in a sea of noise and data. Led by the University of Washington, TrustFinder assists researchers in collaboratively de-risking information exchange by binding digitally-signed annotations to data on the web. TrustFinder empowers communities to co-create information structures, influencing systems and algorithms towards more human outcomes. [Promo Video]

- WiseDex—Social media companies have policies against harmful misinformation. Unfortunately, enforcement is uneven, especially for non-English content. Led by University of Michigan, WiseDex harnesses the wisdom of crowds and AI techniques to help flag more posts. The result is more comprehensive, equitable, and consistent enforcement, significantly reducing the spread of misinformation. [Promo Video]

Full Playlist

For a full YouTube playlist covering each of the Convergence Accelerator Track F censorship grantees, see this 21-video compilation of the grantees describing and demoing their censorship products.

Deeper Dive: The DARPA Connection

One of the most disturbing aspects of the Convergence Accelerator Track F domestic censorship projects is how similar they are to military-grade social media network censorship and monitoring tools developed by the Pentagon for the counterinsurgency and counterterrorism contexts abroad.

In 2011, DARPA created the Social Media in Strategic Communication (SMISC) program “to help identify misinformation or deception campaigns and counter them with truthful information” on social media. At the time, with the Arab Spring in bloom, the role of social media in burgeoning political movements was of great interest to US military, diplomacy and intelligence officials.

DARPA launched SMISC with four specific program goals:

- Detect, classify, measure and track the (a) formation, development and spread of ideas and concepts (memes), and (b) purposeful or deceptive messaging and misinformation.

- Recognize persuasion campaign structures and influence operations across social media sites and communities.

- Identify participants and intent, and measure effects of persuasion campaigns.

- Counter messaging of detected adversary influence operations.

Readers may note the astonishing similarity between these four goals stated by DARPA in 2011 and the four goals Course Correct states in its NSF grant for domestic purposes today.

For DARPA, AI tracking and neutralization of hostile speech online was a way to stop relying on luck and build an early-warning radar system for hostile foreign political activity in conflict zones or during crisis events. As the SMISC program founder wrote in 2011:

The tools that we have today for awareness and defense in the social media space are heavily dependent on chance. We must eliminate our current reliance on a combination of luck and unsophisticated manual methods by using systematic automated and semi‐automated [methods] to detect, classify, measure, track and influence events in social media at data scale and in a timely fashion.

The technology areas of focus for DARPA in 2011 read like a standard grant application for NSF’s Convergence Accelerator Track F in 2021-2023:

- Linguistic cues, patterns of information flow, topic trend analysis, narrative structure analysis, sentiment detection and opinion mining.

- Meme tracking across communities, graph analytics/probabilistic reasoning, pattern detection, cultural narratives.

- Inducing identities, modeling emergent communities, trust analytics, network dynamics modeling.

In October 2020, shortly before the US 2020 election, DARPA announced its INfluence Campaign Awareness and Sensemaking (INCAS) program that sought to “exploit primarily publicly-available data sources including multilingual, multi-platform social media (e.g. blogs, tweets, messaging), online news sources, and online reference data sources” in order to track geopolitical influence campaigns before they become popular.

Even the dates of DARPA’s social media censorship and monitoring dreams align with the NSF Convergence Accelerator Track F projects. For example, the Track F program at NSF was created in September 2021.

That very month, in September 2021, DARPA simultaneously launched a new research project called Civil Sanctuary “to develop multilingual AI moderators to mitigate ‘destructive ideas’ while encouraging ‘positive behavioral norms.’”

It sounds, in many ways, like the exact promotion demo for WiseDex:

Is Civil Sanctuary a vehicle for social media censorship?

According to the opportunity announcement, Civil Sanctuary “will exceed current content moderation capabilities by expanding the moderation paradigm from detection/deletion to proactive, cooperative engagement.”

The announcement doesn’t rule out censorship via detection/deletion, but the program’s main focus will be on proactively engaging social media communities with multilingual AI moderators.

Why is DARPA launching this program?

According to the Civil Sanctuary announcement, “social media environments often fall prey to disinformation, bullying, and malicious rhetoric, which may be perpetuated through broader social dynamics linked to toxic and uncritical group conformity.”

In other words, the Pentagon’s research arm sees social media groups as having a hive mindset that bullies the community while promoting “disinformation.”

DARPA’s response is, “New technologies are required to preserve and promote the positive factors of engagement in online discourse while minimizing the risk of negative social and psychological impacts emerging from violations of platform community guidelines.”

Here’s what DARPA has to say:

“Civil Sanctuary will scale the moderation capability of current platforms, enabling a quicker response to emerging issues and creating a more stable information environment, while simultaneously teaching users more beneficial behaviors that mitigate harmful reactive impulses, including mitigating the uncritical acceptance and amplification of destructive ideas as a means to assert group conformity.”

NSF Convergence Accelerator Track F grantee WiseDex seems to be serving the exact need DARPA was calling for the month it was funded: “scaling moderation capability” of social media posts that contain “violations of platform community guidelines” to “enable a quicker response” for censorship.

NSF Convergence Accelerator Track F isn’t just recycling DARPA’s military-grade censorship research for domestic political purposes; it’s also recycling DARPA personnel.

Dr. Douglas Maughan, the head of the NSF’s Convergence Accelerator program that oversees the Track F censorship projects, previously was a DARPA Program Manager for computer science and network information products. Dr. Maughan also previously worked at the National Security Agency (NSA) and the Department of Homeland Security (DHS) Science and Technology (S&T) Directorate as the Division Director of the Industry Partnerships Division within the Office of Innovation and Collaboration.

FFO has extensively documented how DHS’s public-private censorship partnerships played a critical role in the mass social media censorship of the 2020 election and the 2022 midterms, and in curtailing the ability of average US citizens to make claims about Covid-19.

It is therefore somewhat unsurprising that the technical program head of the Track F program at NSF is yet another former DHS S&T Directorate alum specializing in AI innovation, Mike Pozmontier. With Pozmontier and Maughan helming NSF’s social media speech control research, AI innovation in the field of homeland security has now turned fully into homeland censorship.

Deepening Of DOD Entwinement With Domestic Censorship

Of course, as FFO has previously covered, the Pentagon has increasingly made forays into sponsoring domestic censorship well beyond the theoretical DARPA purview.

One shocking example is the $750,000 taxpayer-funded contract that the Biden Administration’s Defense Department awarded to Newsguard in September 2021. Interestingly, September 2021 is both when the Track F program started at NSF and when DARPA launched its Civil Sanctuary military censorship project. That $750,000 figure is also the exact amount of funding every Track F NSF grantee received as its Phase I seed grant.

Newsguard is perhaps the preeminent censor and demonetizer of independent news websites.

It is also noteworthy that the Pentagon’s contract funding Newsguard was for the task of identifying “Misinformation Fingerprints.” This “misinformation fingerprinting” is a common theme among Track F NSF grantees, such as Course Correct, WiseDex, TrustFinder, Search Lit, CommuniTies, Co·Insights, CO:CAST and ARTT.

This troubling convergence is made all the more disturbing by its near-identical resemblance, in the domestic political context, to the “Birds Of A Feather” political tracking ambitions the CIA and NSA established in the 1990s for counterinsurgency and counterterrorism.

Quoting Quartz in its excellent 2017 report, “Google’s true origin partly lies in CIA and NSA research grants for mass surveillance”:

The research arms of the CIA and NSA hoped that the best computer-science minds in academia could identify what they called “birds of a feather:” Just as geese fly together in large V shapes, or flocks of sparrows make sudden movements together in harmony, they predicted that like-minded groups of humans would move together online. The intelligence community named their first unclassified briefing for scientists the “birds of a feather” briefing, and the “Birds of a Feather Session on the Intelligence Community Initiative in Massive Digital Data Systems” took place at the Fairmont Hotel in San Jose in the spring of 1995.

Their research aim was to track digital fingerprints inside the rapidly expanding global information network, which was then known as the World Wide Web. Could an entire world of digital information be organized so that the requests humans made inside such a network be tracked and sorted? Could their queries be linked and ranked in order of importance? Could “birds of a feather” be identified inside this sea of information so that communities and groups could be tracked in an organized way?

By working with emerging commercial-data companies, their intent was to track like-minded groups of people across the internet and identify them from the digital fingerprints they left behind, much like forensic scientists use fingerprint smudges to identify criminals. Just as “birds of a feather flock together,” they predicted that potential terrorists would communicate with each other in this new global, connected world—and they could find them by identifying patterns in this massive amount of new information. Once these groups were identified, they could then follow their digital trails everywhere.

NSF Track F grantee Course Correct is now building a digital dashboard for domestic “misinformation” that seems to perfectly track the CIA-NSA-DARPA dream of the 1990s for tracking and neutralizing “birds of a feather” terrorist communities:

We are building the core machine learning data science and artificial intelligence technology to identify misinformation, using logistics, network science, and temporal behavior, so that we can very accurately identify what misinformation, where misinformation is spreading, who is consuming the misinformation, and what is the reach of the misinformation.

The misinformation is coming from a separate part of the country or, you know, it is people with a certain perspective, a political view, who are sharing a certain misinformation. You want to be able to tailor the correction based on that because otherwise there’s a lot of research that says that actually corrections don’t help if you’re not able to adjust or tailor it to the person’s context.

It is also noteworthy that DOD grantees and NSF grantees both primarily target the same two sets of domestic speech for censorship: right-wing populist political speech and Covid-19 heterodox speech.

The explicit censorship targeting of right-wing populist groups on social media by Pentagon and DARPA dollars is particularly noteworthy in light of the fact that the targeted movement is the precise faction in Washington that pushed for a $75 billion reduction in the Pentagon’s budget this year. That DOD appears to be targeting the very political threat to its own pecuniary interests for domestic censorship raises disconcerting questions about the national security state’s potential usurpation of civilian political domains.

Foreign Counterterrorism Tools Repurposed For Domestic Info Control

After the initial upsurge in DARPA and NSF funding for social media network surveillance in 2011, DARPA began trying to perfect the art and science of censorship in 2014, for the purpose of stopping ISIS and terrorist propaganda from spreading on social media.

For a reading round-up on this topic, see below:

- Pentagon Wants a Social Media Propaganda Machine

- Why the US Government Spent Millions Trying to Weaponize Memes

- US military studied how to influence Twitter users in Darpa-funded research

- Dems deploying DARPA-funded AI-driven information warfare tool to target pro-Trump accounts

- Technology once used to combat ISIS propaganda is enlisted by Democratic group to counter Trump’s coronavirus messaging

Now, counterterrorism and counterinsurgency censorship technologies perfected by DARPA are being handed off to NSF, in a dark and perverse callback to DARPA’s earlier handoff of the early internet to NSF.

In fact, WiseDex directly brags in its own NSF 2022 Portfolio Guide description that it will bring to the domestic counter-misinformation space the exact same toolkit DARPA pioneered for counterterrorism:

The Global Internet Forum to Counter Terrorism (GIFCT), an industry consortium, maintains a database of perceptual hashes of images and videos produced by terrorist entities… Platforms can check whether images and videos posted to their platforms match any in the databases by comparing hashes. By analogy, a WiseDex misinformation claim profile functions like an image hash in those systems.
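The hash-matching mechanism this quote refers to can be illustrated with a minimal sketch of the lookup step only. Perceptual hashes are typically compared by counting the bits that differ (the Hamming distance), so a slightly altered copy of a known image still lands close to its stored hash. The 16-bit hash values, the 2-bit threshold, and the function names below are hypothetical illustrations; real systems use longer hashes and tuned thresholds, and this sketch does not compute a perceptual hash from an image.

```python
def hamming_distance(a: int, b: int) -> int:
    """Count the bits that differ between two hashes stored as integers."""
    return bin(a ^ b).count("1")


def matches_database(candidate: int, database: set[int], max_distance: int) -> bool:
    """Return True if the candidate is within max_distance bits of any stored hash."""
    return any(hamming_distance(candidate, h) <= max_distance for h in database)


# Hypothetical 16-bit hashes, for illustration only.
known_hashes = {0b1011_0010_1110_0001, 0b0000_1111_0000_1111}
near_copy = 0b1011_0010_1110_0011   # one bit away from the first stored hash
unrelated = 0b0101_0101_0101_0101   # 10 and 8 bits away from the stored hashes

print(matches_database(near_copy, known_hashes, max_distance=2))  # True
print(matches_database(unrelated, known_hashes, max_distance=2))  # False
```

In the analogy the quote draws, a WiseDex claim profile stands in for the stored hash, and a post that matches the profile plays the role of the near-copy.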

In a dark mirror image of the early Internet days, the university system is being roped in and recruited as a scientific and civil society mercenary army. Whereas in the 1990s universities were recruited for the purpose of Internet freedom and flourishing, now they are being recruited and funded for Internet control and censorship.

On a closing note, we stress that the NSF’s Track F censorship projects are still largely in their early or infant stages. Only a handful of Track F projects, like Course Correct, have qualified for the additional $5 million in federal funding to fast-track them into full-fledged censorship juggernauts.

We are not yet beyond the point where this dystopian AI-powered domestic control can simply be put to bed.

But the hour is indeed getting late.
